See https://github.com/slott56/cpx-xmas-ornament

You'll need a Circuit Playground Express https://www.adafruit.com/product/3333

Install the code. Enjoy the noise and blinky lights.

The MML translation isn't as complete as you might like. The upper/lower case for the various commands isn't handled quite as cleanly as it could be. AFAIK, case shouldn't matter, but I omitted any lower() functions, making the MML parser case sensitive. It only mattered for one of the four songs, and it was easier to edit the song.

The processing leaves a great deal of "clickiness" in the start_tone() processing. I think I know how to address it.

There are barely 96 or so different tones available in MML compositions. It might be possible to generate the wave shapes in advance to have a smoother music experience.

One could imagine having an off-line translator to transform the MML text into a sequence of bytes with note number and duration. This would slightly compress the song, and would also speed up processing by eliminating the overhead of parsing.
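As a rough sketch of what that off-line format might look like, the following packs each note into two bytes. It assumes a note number and a duration (in some arbitrary tick unit) that each fit in one byte; it does not parse MML, and the names `pack_song` and `unpack_song` are hypothetical.

```python
from typing import Iterable, Iterator, List, Tuple

Note = Tuple[int, int]  # (note number, duration in ticks) -- an assumed layout

def pack_song(notes: Iterable[Note]) -> bytes:
    """Pack each (note, duration) pair into two bytes."""
    packed = bytearray()
    for number, duration in notes:
        if not (0 <= number < 256 and 0 <= duration < 256):
            raise ValueError(f"won't fit in a byte: {(number, duration)}")
        packed.extend((number, duration))
    return bytes(packed)

def unpack_song(data: bytes) -> Iterator[Note]:
    """Yield (note, duration) pairs back out; no text parsing required."""
    for i in range(0, len(data) - 1, 2):
        yield data[i], data[i + 1]

song: List[Note] = [(48, 8), (50, 8), (52, 16)]
packed = pack_song(song)
```

The player would then loop over `unpack_song()` instead of parsing text on the device.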

Additionally, having 96 wave tables could speed up tone production. The tiny bit of time to recompute the sine wave at a given frequency would be eliminated. But. Memory is limited.
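To get a feel for the memory trade-off, here's a minimal sketch of a precomputed one-cycle sine table. The table length of 32 samples and the unsigned 16-bit sample format are assumptions for illustration; whether a tone changes the table length or the playback rate depends on the audio API, which isn't shown here.

```python
import math
from array import array

def sine_table(length: int, amplitude: int = 2**15 - 1) -> array:
    """One cycle of a sine wave as unsigned 16-bit samples, centered on 2**15."""
    return array(
        "H",
        (
            int(2**15 + amplitude * math.sin(2 * math.pi * i / length))
            for i in range(length)
        ),
    )

def table_bytes(tones: int = 96, length: int = 32) -> int:
    """Rough memory cost of keeping one table per tone."""
    return tones * length * sine_table(length).itemsize

table = sine_table(32)
```

With these assumed sizes, `table_bytes()` gives the total for 96 tables, which is the number to weigh against the limited memory.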

Rants on the daily grind of building software. This has been moved to https://slott56.github.io. Fix your bookmarks.

Moved

Moved. See https://slott56.github.io. All new content goes to the new site. This is a legacy, and will likely be dropped five years after the last post in Jan 2023.

Tuesday, December 31, 2019

Tuesday, December 24, 2019

Walrusing Around

This is -- well -- it is what it is. I don't have to like it.

>>> t_s = (8063599, 0)
>>> fields = [(t_s := divmod(t_s[0], b))[1] for b in (60, 60, 24, 7)]
>>> list(reversed(fields + [t_s[0]]))
[13, 2, 7, 53, 19]

It works and shows how the assignment operator works.

The point here is to convert a timestamp into ISO week, day, hour, minute, second. 13th week, 2nd day, 7h, 53m, 19s.

The divmod() function returns a two-tuple, which the assignment operator can't decompose. Instead, we decompose it by wrapping the whole thing in ()[1].

Works.

Do Not Recommend.
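For comparison, here's a sketch of the same decomposition with an ordinary for statement and tuple unpacking. The function name `week_fields` is made up for this example.

```python
from typing import List

def week_fields(seconds: int) -> List[int]:
    """Decompose seconds into [weeks, days, hours, minutes, seconds]."""
    fields = []
    remaining = seconds
    for base in (60, 60, 24, 7):
        # An ordinary assignment statement can unpack the two-tuple directly.
        remaining, field = divmod(remaining, base)
        fields.append(field)
    return [remaining] + fields[::-1]

print(week_fields(8063599))  # [13, 2, 7, 53, 19]
```

It's a few more lines, but nothing needs to be wrapped in ()[1].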

Tuesday, December 17, 2019

Planning a LinkedIn Learning Course (and using the := walrus operator)

I've recorded two courses for LinkedIn Learning https://www.linkedin.com/learning/me

Let me emphasize that their production values take a lot of work. While I think I'm a pretty good live presenter, a few days in the recording booth with a producer reveals all my weaknesses. so. um. you know?

I'm starting down the road to at least one more, maybe another one or two after that.

Which leads to code. Of course. And the code uses the assignment expression ("walrus") operator.

Here's what's going on. I've got a directory full of CSV files with the slide-by-slide scripts. Each file has a bunch of tabs, and the relevant tables have a fixed heading that the production folks use.

target_headings = ['Part', 'Voice', 'Visual Description', 'Storyboard / Description']

The "Voice" column in these tables is the script. Each row is a slide or other visual. The overall management of the resources with all of these spreadsheets doesn't seem ideal to me. However, it's the way skilled professionals prefer to manage these multi-media assets.

The question is: "which sections are too long?"

Generally, we speak at a consistent rate. During rehearsals, I can use my stopwatch to get timing for a particular script. This gives me a seconds/word or words/second rate metric. Given an average rate, and a script, I can predict a likely duration given the text of the script.

The data is in spreadsheets -- generally the root cause of many complications. There's no word-count in Numbers. So. Time to apply Python. (I'm sure someone has a bunch of Excel macros that can do word-counts. Good for you. I don't own a copy of Excel.)

Here's how this shakes out. There are three parts: data gathering, modeling, and applying the model. The first is a functional flattener to turn all of the files and tabs and tables into a single stream of useful rows.

The Data Gathering

The essential data gathering has to flatten the relatively complex file/sheet/table structure into something we can extract features from. A sequence of the final text of the scripts is what we want. Each script can be a mapping from the slide label to the voice content. It's this content -- the script text -- where we'll find the interesting features.

Here's how this starts.

from pathlib import Path
from fractions import Fraction
import csv
import re
from typing import Tuple, Dict, Iterator, List

# Separator rows look like "Sheet: Table". A raw string keeps \w intact.
sheet_table_pattern = re.compile(r"^(\w+): (.+)$")

target_headings = ['Part', 'Voice', 'Visual Description', 'Storyboard / Description']

def script_iter(source: Path) -> Iterator[Tuple[str, Dict[str, str]]]:
    for script_path in sorted(source.glob("*.csv")):
        # print(script_path)
        with script_path.open() as script_file:
            reader = csv.reader(script_file)
            row_iter = iter(reader)
            for row in row_iter:
                if len(row) == 1 and (match := sheet_table_pattern.match(row[0])):
                    if match.group(2).startswith('Table '):
                        headings = next(row_iter)
                        if headings == target_headings:
                            section = match.group(1)
                            text = {}
                            # print(f"Analyzing {section}")
                            for sub_row in row_iter:
                                if len(sub_row) == 0:
                                    break
                                dict_sub_row = dict(zip(headings, sub_row))
                                text[dict_sub_row['Part']] = dict_sub_row['Voice']
                            yield section, text

The outermost for statement locates all .csv files. All the rows within a file will belong to a number of sheets and tables within each sheet. The separator is a line with a Sheet: Table string, described by the sheet_table_pattern. The second for statement picks all the rows from a given sheet, looking for the separators.

There are a bunch of irrelevant tables. Hence the tall stack of if-statements. The useful parts of the script all have names that start with 'Table '. Weird, but true. The match.group(2).startswith('Table ') check feels like some casual ad-hoc test and should probably be made more visible and configurable.

Once we've found a table with the right headings, we can iterate over the following rows until we get to a blank line at end-of-table. We accumulate a dictionary, named text, which has the 'Part' and 'Voice' column values as a handy Dict[str, str] mapping.

Note that we're sharing an iterator, the row_iter variable, among two for statements. This is a very handy trick when doing this kind of partitioning. The outermost use of the iterator is rejecting irrelevant rows. The inner use of the iterator is assembling composite objects from a subset of rows, effectively partitioning the raw data.
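The shared-iterator trick is easier to see in a smaller setting. This sketch uses made-up data: a header line starting with "# " opens a section, and a blank line closes it. The `sections` function and the data layout are assumptions for illustration only.

```python
from typing import Iterable, Iterator, List, Tuple

def sections(lines: Iterable[str]) -> Iterator[Tuple[str, List[str]]]:
    """Group the lines after each '# name' header, up to the next blank line."""
    line_iter = iter(lines)
    for line in line_iter:              # outer loop: skip until a header shows up
        if line.startswith("# "):
            body = []
            for sub_line in line_iter:  # inner loop: consume this section's rows
                if not sub_line:
                    break
                body.append(sub_line)
            yield line[2:], body

raw = ["junk", "# first", "a", "b", "", "noise", "# second", "c"]
print(list(sections(raw)))
# [('first', ['a', 'b']), ('second', ['c'])]
```

Because both loops pull from the same iterator object, the outer loop resumes exactly where the inner loop stopped.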

This *can* be decomposed into separate functions. Further refactoring is left as an exercise for the reader.

The Benchmark Data

The result of benchmarking is a Fraction object with my unique reading pace. And yes, a Fraction makes more sense than a float value. We're working in int space, and introducing float seems wrong.

Here's the benchmarking to create a model.

def rate() -> Fraction:
    # Stopwatch timings (in seconds) and word counts for sample sections.
    Benchmarks = [
        {'time': 3*60 + 29, 'words': 568},  # 01_01
        {'time': 5*60 + 32, 'words': 732},  # 01_04
        {'time': 5*60 + 54, 'words': 985},  # 02_04
        {'time': 4*60 + 58, 'words': 663},  # 02_05
        {'time': 8*60 + 48, 'words': 1192},  # 03_02 (draft)
    ]
    time_bm = sum(b['time'] for b in Benchmarks)
    words_bm = sum(b['words'] for b in Benchmarks)
    # Build the Fraction from the two ints; dividing first would create a float.
    time_per_word = Fraction(time_bm, words_bm)
    return time_per_word

For some sample sections, I read through the material in my best NPR professional broadcasting voice. The sums of words and times give us a time-per-word Fraction object. The resulting value is near 31 seconds for 75 words.

I really like using Fraction instead of float for this kind of thing. The data doesn't support even one decimal place of supposed accuracy.

Note that I didn't factor in any slide count. I assumed this is a linear model from words to time. If I was a real scientist I might have tried a bunch of models.

Applying the Model

The model is linear. It's a scaling factor applied to a specific feature, the number of words. Here's one version of the code. I'm not sure I like it.

def main() -> None:
    time_per_word = rate()
    source = Path.cwd()
    print("script, slides, words, time")
    for script, body in script_iter(source):
        word_count = sum(len(text.split()) for text in body.values())
        slide_count = sum(1 for text in body.values() if len(text) > 0)
        m, s = divmod(int(word_count*time_per_word), 60)
        print(f"{script}, {slide_count}, {word_count}, {m}:{s:02d}")

There are three mappings going on here. This makes it a little tricky to create a simple function to map from raw data to something the model can use, then applying the model.

The 'word_count' is a mapping from raw data to one feature. The 'slide_count' is another mapping from raw data to a secondary feature. The 'm' and 's' values represent another mapping from the word_count to the estimated time.

We can hack this around to find another use for the assignment operator. But the following seems insane:

divmod(int((word_count := sum(len(text.split()) for text in body.values())) * time_per_word), 60)

Let's not consider this assignment expression example as particularly helpful. The above turns two simple statements into a mess.

Alternative Implementation

The relationships among the mappings can be built in a purely functional style, but that seems to flirt with needless complexity. We can have a pair of functions to map the body.values() to some named tuple with feature values. We can use a third function to apply the model.

Something like this is an alternative that's slightly more functional.

from typing import NamedTuple

class Features(NamedTuple):
    body: Dict[str, str]

    @property
    def word_count(self) -> int:
        return sum(len(text.split()) for text in self.body.values())

    @property
    def slide_count(self) -> int:
        return sum(1 for text in self.body.values() if len(text) > 0)

    def duration(self, time_per_word: Fraction) -> int:
        return int(self.word_count*time_per_word)

def main_2() -> None:
    time_per_word = rate()
    source = Path.cwd()
    print("script, slides, words, time")
    for script, body in script_iter(source):
        details = Features(body)
        m, s = divmod(details.duration(time_per_word), 60)
        print(f"{script}, {details.slide_count}, {details.word_count}, {m}:{s:02d}")

I'm not sure this is dramatically "better". It isolates some aspects of feature collection and model application. It also harbors a secret inefficiency. The two feature values should be cached to avoid recomputing them.
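One possible way to avoid the recomputation is to compute the features once, up front, and store plain values in the named tuple. This is only a sketch of one option; the `from_body` class method is a name invented here, and the sample body is made up.

```python
from fractions import Fraction
from typing import Dict, NamedTuple

class Features(NamedTuple):
    word_count: int
    slide_count: int

    @classmethod
    def from_body(cls, body: Dict[str, str]) -> "Features":
        """Compute each feature exactly once, then store the plain values."""
        return cls(
            word_count=sum(len(text.split()) for text in body.values()),
            slide_count=sum(1 for text in body.values() if len(text) > 0),
        )

    def duration(self, time_per_word: Fraction) -> int:
        return int(self.word_count * time_per_word)

details = Features.from_body({"1": "Hello and welcome", "2": "", "3": "Let's begin"})
```

The trade-off is that the tuple no longer holds the original body, only the derived values.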

I'll leave the refactoring for the interested reader.

The durations over 5:00 (300 seconds) need some rework. That's the actual useful output: the list of scripts with excessive time becomes the queue of content that needs rework.
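Building that queue could look something like the following sketch. The `rework_queue` function is hypothetical; the pace is the sum of the benchmark times over the sum of the benchmark words, and the sample word counts are borrowed from the benchmark table purely as test data.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

LIMIT = 300  # seconds; the 5:00 threshold

def rework_queue(word_counts: Dict[str, int], time_per_word: Fraction) -> List[Tuple[str, int]]:
    """Scripts whose estimated duration exceeds LIMIT, longest first."""
    estimates = ((name, int(words * time_per_word)) for name, words in word_counts.items())
    return sorted(
        (pair for pair in estimates if pair[1] > LIMIT),
        key=lambda pair: pair[1],
        reverse=True,
    )

pace = Fraction(1721, 4140)  # sums of the benchmark times and words
print(rework_queue({"01_01": 568, "01_04": 732, "03_02": 1192}, pace))
# [('03_02', 495), ('01_04', 304)]
```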

Wednesday, December 4, 2019

Creating Palindromes -- if possible -- from a string of letters.

This can be an interesting exercise. I think it is something that can help people learn to code well. I found this in the LinkedIn Python community: https://www.linkedin.com/groups/25827/.

The Palindrome Problem:

Make a function that makes a palindrome out of the letters in a string and

returns -1 if this is not possible.

Convert a list of strings with the function.

Some test cases:

>>> palify('eedd')

'edde' (or 'deed')

>>> palify('wgerar')

>>> palify('uiuiqii')

'uiiqiiu' or several similar variants.

Let's not get too carried away. I like *some* of this problem.

I don't like the idea of Union[str, int] as a return type from this function. Yes, it's valid Python, but it seems like a code smell. Since the intent is to build lists, a None would be more sensible than a number; we'd have Optional[str] which seems better overall.

The solution that was posted was interesting. It did way too much work, but it was acceptable-looking Python. (It started with a big block comment with "#" on each line instead of a docstring, so... there were minor style problems, but otherwise, it was not bad.)

Here's what popped into my head, to act as a concrete response to the request for comments.

"""

Make a function that makes a palindrome out of the letters in a string and
returns -1 if this is not possible.
Convert a list of strings with the function.

Some test cases:

>>> palify('eedd')
'edde'
>>> palify('wgerar')
>>> palify('uiuiqii')
'uiiqiiu'
"""
from typing import Optional, Set

def palify(source: str) -> Optional[str]:
    """Core palindromic conversion."""
    singletons: Set[str] = set()
    pairs = list()
    for c in source:
        if c in singletons:
            pairs.append(c)
            singletons.remove(c)
        else:
            singletons.add(c)
    if pairs and len(singletons) <= 1:
        # presuming a single letter can't be palindromic.
        return ''.join(pairs+list(singletons)+pairs[::-1])
    return None

if __name__ == "__main__":
    s = ['eedd', 'wgerar', 'uiuiqii']
    p = list(map(palify, s))
    print(f"from {s=}, we get {p=}")

The core problem statement is interesting. And the ancillary requirement is almost as interesting as the problem.

The simple-seeming "Make a palindrome out of the letters of the string" has two parts. First, there's the question of "can it even become a palindrome"? Which implies validating the source data against some set of rules. After that, we have to emit one of the many possible palindromes from the source material.

The original post had a complicated survey of the data. This was followed by an elegant way of creating a palindrome from the survey data. Since we're looking for a bunch of pairs and a singleton, I elided the more complex survey and opted to collect pairs and singletons into two separate collections.

When we've consumed the input, we will have partitioned the characters into their two pools and we can decide if the pools have the right sizes to proceed. The emission of the palindrome is a lazy assembly of the resulting data, first as a list, and then transformed to a single string.

The ancillary requirement is interesting in its own right. When a bundle of letters can't form a palindrome, that seems like a ValueError exception to me. Doing bulk transformations in the presence of ValueErrors seems wrong-ish. I already griefed about the -1 response above: it seems very bad. A None is less bad than -1. An Exception, however, seems like a more right thing to do.
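Here's a sketch of what the exception-based variant might look like, along with one way a bulk transformation could cope with it. The names `palify_strict` and `palify_all` are invented for this example; mapping a failure to None is just one of the possible policies.

```python
from typing import Iterable, List, Optional, Set

def palify_strict(source: str) -> str:
    """Like palify(), but raise ValueError when no palindrome is possible."""
    singletons: Set[str] = set()
    pairs: List[str] = []
    for c in source:
        if c in singletons:
            pairs.append(c)
            singletons.remove(c)
        else:
            singletons.add(c)
    if pairs and len(singletons) <= 1:
        return "".join(pairs + list(singletons) + pairs[::-1])
    raise ValueError(f"can't make a palindrome from {source!r}")

def palify_all(sources: Iterable[str]) -> List[Optional[str]]:
    """Bulk conversion: the caller decides what a failure turns into."""
    results: List[Optional[str]] = []
    for s in sources:
        try:
            results.append(palify_strict(s))
        except ValueError:
            results.append(None)
    return results
```

This keeps the core function honest about failure while leaving the "what goes in the list" decision to the bulk wrapper.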

Code Review Response

I think my response to the original code should be follow-up questions on why a defaultdict(int) was used to survey the data in the first place. A Counter() is a better idea, and requires less code.

The survey involved trying to locate singletons -- a laudable goal. There may have been a better approach to looking for the presence of a singleton letter in the Counter values.
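For the sake of discussion, a Counter-based survey might look like this. It's a sketch, not the code from the original post; `palify_counter` is a made-up name, and it keeps the same presumption that there must be at least one pair.

```python
from collections import Counter
from typing import Optional

def palify_counter(source: str) -> Optional[str]:
    """Survey the letters with a Counter, then assemble half + middle + half."""
    counts = Counter(source)
    # Letters with an odd count; at most one can sit in the middle.
    odd = [c for c, n in counts.items() if n % 2]
    half = "".join(c * (n // 2) for c, n in counts.items())
    if half and len(odd) <= 1:
        return half + "".join(odd) + half[::-1]
    return None

print([palify_counter(s) for s in ("eedd", "wgerar", "uiuiqii")])
# ['edde', None, 'uiiqiiu']
```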

More fundamentally, there are few states for each letter. There are two stark algorithmic choices: a structure keyed by letter or collections of letters. I've shown the collections of letters, and hinted at the structure keyed by letter. The student response used a structure keyed by letter.

I think this problem serves as a good discussion for algorithmic alternatives. The core problem of detecting the possibility of palindromicity for a bunch of letters is cool. There are two choices. The handling of the exceptional case (-1, None or ValueError) is another bundle of choices.

Tuesday, December 3, 2019

Functional programming design pattern: Nested Iterators == Flattening

Here's a functional programming design pattern I uncovered. This may not be news to you, but it was a surprise to me. It cropped up when looking at something that needs parallelization to reduce the elapsed run time.

Consider this data collection process.

for h in some_high_level_collection(arg1):
    for l in h.some_low_level_collection(arg2):
        if some_filter(l):
            logger.info("Processing %s %s", h, l)
            some_function(h, l)

This is pretty common in devops world. You might be looking at all repositories in all github organizations. You might be looking at all keys in all AWS S3 buckets under a specific account. You might be looking at all tables owned by all schemas in a database.

It's helpful -- for the moment -- to stay away from taller tree structures like the file system. Traversing the file system involves recursion, and the pattern is slightly different there. We'll get to it, but what made this clear to me was a "simpler" walk through a two-layer hierarchy.

The nested for-statements aren't really ideal. We can't apply any itertools techniques here. We can't trivially change this to a multiprocessing.Pool.map().

In fact, the more we look at this, the worse it is.

Here's something that's a little easier to work with:

def h_l_iter(arg1, arg2):
    for h in some_high_level_collection(arg1):
        for l in h.some_low_level_collection(arg2):
            if some_filter(l):
                logger.info("Processing %s %s", h, l)
                yield h, l

itertools.starmap(some_function, h_l_iter(arg1, arg2))

The data gathering has expanded to a few more lines of code. It gained a lot of flexibility. Once we have something that can be used with starmap, it can also be used with other itertools functions to do additional processing steps without breaking the loops into horrible pieces.

I think the pattern here is a kind of "Flattened Map" transformation. The initial design, with nested loops wrapping a process wasn't a good plan. A better plan is to think of the nested loops as a way to flatten the two tiers of the hierarchy into a single iterator. Then a mapping can be applied to process each item from that flat iterator.
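A concrete, self-contained version of the "Flattened Map" idea might look like this. The `BUCKETS` dictionary is a hypothetical stand-in for a two-level hierarchy, and `flatten` and `describe` are names made up for the sketch.

```python
import itertools
from typing import Dict, Iterator, List, Tuple

# Hypothetical stand-in for a two-level hierarchy (buckets and their keys, say).
BUCKETS: Dict[str, List[str]] = {
    "logs": ["2019-12-01.gz", "2019-12-02.gz"],
    "images": ["cat.png"],
}

def flatten(tree: Dict[str, List[str]]) -> Iterator[Tuple[str, str]]:
    """Flatten the two tiers into one stream of (high, low) pairs."""
    for high, lows in tree.items():
        for low in lows:
            yield high, low

def describe(high: str, low: str) -> str:
    """The per-item mapping, applied to each flattened pair."""
    return f"{high}/{low}"

paths = list(itertools.starmap(describe, flatten(BUCKETS)))
print(paths)
# ['logs/2019-12-01.gz', 'logs/2019-12-02.gz', 'images/cat.png']
```

Note that starmap() is lazy; wrapping it in list() is what actually drives the processing.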

Extracting the Filter

We can now tease apart the nested loops to expose the filter. In the version above, the body of the h_l_iter() function binds log-writing with the yield. If we take those two apart, we gain the flexibility of being able to change the filter (or the logging) without an awfully complex rewrite.

import itertools
from typing import Iterable, Iterator, TypeVar

T = TypeVar('T')

def logging_iter(source: Iterable[T]) -> Iterator[T]:
    for item in source:
        logger.info("Processing %s", item)
        yield item

def h_l_iter(arg1, arg2):
    for h in some_high_level_collection(arg1):
        for l in h.some_low_level_collection(arg2):
            yield h, l

raw_data = h_l_iter(arg1, arg2)
# Each item is an (h, l) pair; the filter only looks at l.
filtered_subset = logging_iter(filter(lambda h_l: some_filter(h_l[1]), raw_data))
# starmap() is lazy; consume the result to do the work.
itertools.starmap(some_function, filtered_subset)

Yes, this is still longer, but all of the details are now exposed in a way that lets me change filters without further breakage.

Now, I can introduce various forms of multiprocessing to improve concurrency.

This transformed a hard-wired set of nested loops, if, and function evaluation into a "Flattener" that can be combined with off-the-shelf filtering and mapping functions.

I've snuck in a kind of "tee" operation that writes an iterable sequence to a log. This can be injected at any point in the processing.

Logging the entire "item" value isn't really a great idea. Another mapping is required to create sensible log messages from each item. I've left that out to keep this exposition more focused.
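As a sketch of where the concurrency could plug in, a pool's starmap() accepts the flattened iterator directly. To keep the example self-contained, this uses multiprocessing.pool.ThreadPool, which offers the same interface as multiprocessing.Pool but runs threads; swapping in a process pool is an assumption left to the reader. The `pairs` and `work` functions are made-up stand-ins.

```python
from multiprocessing.pool import ThreadPool
from typing import Iterator, Tuple

def pairs() -> Iterator[Tuple[int, int]]:
    """Stand-in for the flattened (h, l) iterator."""
    for h in range(3):
        for l in range(2):
            yield h, l

def work(h: int, l: int) -> int:
    """Stand-in for some_function(h, l)."""
    return 10 * h + l

with ThreadPool(processes=4) as pool:
    results = pool.starmap(work, pairs())
print(results)  # [0, 1, 10, 11, 20, 21]
```

The results come back in input order, so the switch from itertools.starmap() doesn't change what downstream code sees.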

I'm sure others have seen this pattern, but it was eye-opening to me.

Full Flattening

The h_l_iter() function can be replaced by a generator expression. A function isn't needed.

h_l_iter = (
    (h, l)
    for h in some_high_level_collection(arg1)
    for l in h.some_low_level_collection(arg2)
)

This simplification doesn't add much value, but it seems to be a general truth. In Python, it's a small change in syntax and, therefore, an easy optimization to make.

What About The File System?

When we're working with a more deeply nested structure, like the file system, we'll make a small change. We'll replace the h_l_iter() function with a recursive_walk() function.

def recursive_walk(path: Path) -> Iterator[Path]:
    # iterdir() lists the immediate children; glob() would need a pattern.
    for item in path.iterdir():
        if item.is_file():
            yield item
        elif item.is_dir():
            yield from recursive_walk(item)

This function has, effectively, the same signature as h_l_iter(). It walks a complex structure, yielding a flat sequence of items. The other functions used for filtering, logging, and processing don't change, allowing us to build new features from various combinations of these functions.
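Here's a small, runnable sketch of the recursive walk combined with an ordinary filter(). It builds a throwaway directory tree with made-up file names, uses iterdir() to list children (an assumption about the intent), and sorts the entries only to make the output predictable.

```python
import tempfile
from pathlib import Path
from typing import Iterator

def recursive_walk(path: Path) -> Iterator[Path]:
    """Flatten a directory tree into a stream of file paths."""
    for item in sorted(path.iterdir()):
        if item.is_file():
            yield item
        elif item.is_dir():
            yield from recursive_walk(item)

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "sub" / "deeper").mkdir(parents=True)
    for name in ("a.py", "sub/b.txt", "sub/deeper/c.py"):
        (root / name).write_text("")
    # The same filter-then-process pipeline works on the flattened stream.
    python_files = [
        p.relative_to(root).as_posix()
        for p in filter(lambda p: p.suffix == ".py", recursive_walk(root))
    ]
print(python_files)  # ['a.py', 'sub/deeper/c.py']
```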

tl;dr

The too-long version of this is:

Replace for item in iter: process(item) with map(process, iter).

This pattern works for simple, flat items, nested structures, and even recursively-defined trees. It introduces flexibility with no real cost.

The other pattern in play is:

Any for item in iter: for sub-item in item: processing is "flattening" a hierarchy into a sequence. Replace it with (sub-item for item in iter for sub-item in item).

These felt like blinding revelations to me.

Tuesday, November 26, 2019

Refactoring

Follow my Patreon: Become a Patron!

I'll try to focus on my Building Skills in OO Design book there. I'm thinking of adding some more code examples. Is that a good idea?

Maybe that should be the higher-level Patreon benefit?

Tuesday, November 19, 2019

Python 3.8 features

Real Python (@realpython): 📺🐍 Cool New Features in Python 3.8 realpython.com/courses/cool-n…

I'm learning a lot about my previously sketchy designs and potential problems with some of them. There are a number of things in Python which "work" in a vague hand-wavey way. But they don't work in a "I can convince mypy this will work" way.

The additional "convince mypy" rigor can separate potentially sketchy design from an unassailable design.

Tuesday, November 12, 2019

Python 2 Remediation

https://medium.com/capital-one-tech/python-2-remediation-and-problems-at-sea-a58e805d6468

We're about out of time for this effort.

Tuesday, October 29, 2019

Building Skills in OO Design

See https://www.patreon.com/posts/30995708

I've (finally) gotten the book content upgraded to Python 3.7.

I've also deleted all the previous versions of the book. I had been keeping them on my web server because -- well -- because I don't know why. They go back to at least 2011, some of the content may be even older than that.

I've also deleted some previous self-published content.

(I started writing about Python almost 20 years ago. Some of the content could have been that old. It deserves to be deleted.)

Tuesday, October 22, 2019

State Change and NoSQL Databases

Let's take another look at F. L. Stevens' spreadsheet with agencies and agents. It's -- of course -- an unholy mess. Why? It's difficult to handle state change and deduplication.

Let's look at state changes.

The author needs URL's and names and a list of genres the agent is interested in. This is more-or-less static data. It changes rarely. What changes more often is an agent being closed or open to queries.

Another state change is the query itself. Once the email has been sent, the agent (and their agency) should not be bothered again for at least sixty days. After an explicit rejection, there's little point in making any contact with the agent; they're effectively out of the market for a given manuscript.

There are some other stateful rules; we don't need all the details to see the potential complexities here.

A spreadsheet presents a particularly odious non-solution to the problem of state and state change. There's a good and a bad. Mostly bad.

We can try to spread history down the rows of a column. Wow this is bad. We can try to use the hierarchy features to make history a bunch of folded-up details underneath a heading row. This is microscopically better, but still difficult to manage with all the unfolding and folding required to change state after a rejection.

We can blow up a single cell to have non-atomic data -- all of the history with events and dates in a long, ";" delimited list.

There's no good way to represent this in a spreadsheet.

The rigidity of the SQL schema is a barrier here. We're dealing with some sloppy data handling practices in the legacy spreadsheet. We don't want to have to tweak the SQL each time we find some new subtlety that's poorly represented in the spreadsheet data.

We're also handling a number of data sources, each with a unique schema. We need a way to unify these flexibly, so we can fold in additional data sources, once the broken spreadsheet is behind us.

(There are yet more problems with the relational model in general; those are material for a separate blog post. For now, the rigidity and complexity are a big enough pair of problems.)

We have an Agency as the primary document. Within an Agency, there are a number of individual Agents. Within each agent is a series of Events. Some Agents aren't even interested in the genre F. L. Stevens writes, so they're closed. Some Agents are temporarily closed. The rest are open.

The author can get a list of open agents, following a number of rules, including waiting after the last contact, and avoiding working with multiple agents within a single agency. After sending query letters, the event history gets an entry, and those agents are in another state, query pending.

One common complaint I hear about a document store is the "cost" of updating a large-ish document. The implicit assumption seems to be that an update operation can't locate the relevant sub-document, and can't make incremental changes. Having worked with both SQL and NoSQL, this "cost of document update" seems to be unmeasurably small.

Another cluster of common questions hovers around locking and concurrency. Most of them are nonsensical because they come from the world of fragmented data in a SQL database. When the relevant object (i.e. Agency) is spread over a lot of rows of several tables, locking is essential. When the relevant object is a single document, locks aren't as important. If two people are updating the same document at the same time, that's a document design issue, or a control issue in the application.

Finally, there are questions about "update anomalies." This is a sensible question. In the relational world, we often have shared "lookup" data. A single change to a lookup row will have a ripple effect to all rows using the lookup row's foreign key.

Think of changing zip code 12345 from Schenectady, NY to Scotia, NY. Everyone sharing the foreign key reference via the zip code has been moved with a single update. Except, of course, nothing is visible until a query reconstructs the desired document from the fragmented pieces.

We've traded a rare sweeping updated across many documents for a sweeping, complex join operating to build the relevant document from the normalized pieces. Queries are expensive, complex, and often wrong. They're so painful, we use ORM's to mask the queries and give us the documents we wanted all along.

We have three classes here. Agency is the parent document. Each Agency contains one or more Agent instances. Each Agent contains one or more Events.

When we fetch an agent's data, we fetch the entire agency, since the "business" rules preclude querying more than one agent in an agency. The queries involve a nuanced state change: a rejection by one agent, opens another in the same agency. Rather than do some additional SQL queries to locate the parent and other children of the parent, just read the whole thing at once.

In later posts, we'll look at deduplication and some other processing. But this seems to be all the schema we'll ever need. The type hints provided mypy some evidence of what we intend to do with these documents.

Let's look at state changes.

The author needs URL's and names and a list of genres the agent is interested in. This is more-or-less static data. It changes rarely. What changes more often is an agent being closed or open to queries.

Another state change is the query itself. Once the email has been sent, the agent (and their agency) should not be bothered again for at least sixty days. After an explicit rejection, there's little point in making any contact with the agent; they're effectively out of the market for a given manuscript.

There are some other stateful rules; we don't need all the details to see the potential complexities here.

A spreadsheet presents a particularly odious non-solution to the problem of state and state change. There's a good and a bad. Mostly bad.

- On the good side, you can edit a single cell, changing the state. You can define a drop-down list of states, or radio buttons with alternative states.

- On the bad side, you're often limited to editing a single cell when you want to change the state. You want to have dates filled in automatically on state change. You want history of state changes. Excel hackers try to write macros to automate filling in the date. History, however... History is a problem.

We can try to spread history down the rows of a column. Wow this is bad. We can try to use the hierarchy features to make history a bunch of folded-up details underneath a heading row. This is microscopically better, but still difficult to manage with all the unfolding and folding required to change state after a rejection.

We can blow up a single cell to have non-atomic data -- all of the history with events and dates in a long, ";" delimited list.

There's no good way to represent this in a spreadsheet.

What to do?

The relational database people love the master-detail relationship. Agency has Agent. Agent has History. The history is a bunch of rows in the history table, with a foreign key relationship with the agent.

The rigidity of the SQL schema is a barrier here. We're dealing with some sloppy data handling practices in the legacy spreadsheet. We don't want to have to tweak the SQL each time we find some new subtlety that's poorly represented in the spreadsheet data.

We're also handling a number of data sources, each with a unique schema. We need a way to unify these flexibly, so we can fold in additional data sources, once the broken spreadsheet is behind us.

(There are yet more problems with the relational model in general; those are material for a separate blog post. For now, the rigidity and complexity are a big enough pair of problems.)

SQL is Out. What Else?

A document store is pretty nice for this. The rest of this section is an indictment of SQL. Feel free to skip it. It's widely known, and well supported elsewhere.

We have an Agency as the primary document. Within an Agency, there are a number of individual Agents. Within each agent is a series of Events. Some Agents aren't even interested in the genre F. L. Stevens writes, so they're closed. Some Agents are temporarily closed. The rest are open.

The author can get a list of open agents, following a number of rules, including waiting after the last contact, and avoiding working with multiple agents within a single agency. After sending query letters, the event history gets an entry, and those agents are in another state, query pending.

One common complaint I hear about a document store is the "cost" of updating a large-ish document. The implicit assumption seems to be that an update operation can't locate the relevant sub-document, and can't make incremental changes. Having worked with both SQL and NoSQL, this "cost of document update" seems to be unmeasurably small.

Another common cluster of questions hovers around locking and concurrency. Most of them are nonsensical because they come from the world of fragmented data in a SQL database. When the relevant object (i.e., Agency) is spread over a lot of rows of several tables, locking is essential. When the relevant object is a single document, locks aren't as important. If two people are updating the same document at the same time, that's a document design issue, or a control issue in the application.

Finally, there are questions about "update anomalies." This is a sensible question. In the relational world, we often have shared "lookup" data. A single change to a lookup row will have a ripple effect to all rows using the lookup row's foreign key.

Think of changing zip code 12345 from Schenectady, NY to Scotia, NY. Everyone sharing the foreign key reference via the zip code has been moved with a single update. Except, of course, nothing is visible until a query reconstructs the desired document from the fragmented pieces.

We've traded a rare sweeping update across many documents for a sweeping, complex join operation to build the relevant document from the normalized pieces. Queries are expensive, complex, and often wrong. They're so painful, we use ORMs to mask the queries and give us the documents we wanted all along.
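To make the trade concrete, here's a minimal sketch of the "rare sweeping update" in plain Python. The documents are ordinary dicts, and the field names (`zip`, `city`) and the `rename_city()` helper are invented for illustration; they aren't part of any real schema or document store API.

```python
from typing import Any, Dict, List

# Hypothetical denormalized documents: each carries its own copy of the city.
documents: List[Dict[str, Any]] = [
    {"name": "A. Reader", "zip": "12345", "city": "Schenectady, NY"},
    {"name": "B. Writer", "zip": "12345", "city": "Schenectady, NY"},
    {"name": "C. Editor", "zip": "54321", "city": "Elsewhere, NY"},
]


def rename_city(docs: List[Dict[str, Any]], zip_code: str, new_city: str) -> int:
    """The rare sweeping update: touch every document using the zip code."""
    changed = 0
    for doc in docs:
        if doc["zip"] == zip_code and doc["city"] != new_city:
            doc["city"] = new_city
            changed += 1
    return changed


changed = rename_city(documents, "12345", "Scotia, NY")
print(changed, [doc["city"] for doc in documents])
```

The sweep is a loop we run once in a great while. Reading any one document afterward needs no join at all.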

What's It Look Like?

This:

```python
import datetime
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Agency:
    """A collection of individual agents."""
    name: str
    url: Optional[str] = field(default=None)
    agents: Dict[str, 'Agent'] = field(init=False, default_factory=dict)


@dataclass
class Agent:
    """An Agent with a sequence of events: actions and state changes."""
    name: str
    url: str
    email: str
    fiction_genres: List[str]
    query_details: str = field(default_factory=str)
    events: List['Event'] = field(init=False, default_factory=list)


@dataclass
class Event:
    """An action or state change.

    status = 'open', 'closed', 'query sent', 'query outcome', 'closed until', etc.

    Depending on the status, there may be additional details.
    For 'query sent', there's 'date'.
    For 'query outcome', there's 'outcome' and an optional 'date'.
    For 'closed until', there's 'reason' and an optional 'date'.
    """
    status: str
    date: Optional[datetime.date] = field(default=None)
    outcome: Optional[str] = field(default=None)
    reason: Optional[str] = field(default=None)

    def __repr__(self):
        return f"{self.status} {self.date} {self.outcome} {self.reason}"
```

We have three classes here. Agency is the parent document. Each Agency contains one or more Agent instances. Each Agent contains one or more Events.

When we fetch an agent's data, we fetch the entire agency, since the "business" rules preclude querying more than one agent in an agency. The queries involve a nuanced state change: a rejection by one agent opens another in the same agency. Rather than do some additional SQL queries to locate the parent and other children of the parent, just read the whole thing at once.
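Here's a rough sketch of why having the whole agency in hand is convenient. The classes are trimmed-down stand-ins for the document classes, and the `open_agents()` rule (along with the 60-day `WAIT`) is my simplified assumption about the business rules, not the real implementation.

```python
import datetime
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Event:
    status: str
    date: Optional[datetime.date] = None
    outcome: Optional[str] = None


@dataclass
class Agent:
    name: str
    events: List[Event] = field(default_factory=list)


@dataclass
class Agency:
    name: str
    agents: Dict[str, Agent] = field(default_factory=dict)


WAIT = datetime.timedelta(days=60)  # assumed quiet period after a query


def is_pending(agent: Agent, today: datetime.date) -> bool:
    """True if the agent's latest event is a query still inside the wait."""
    if not agent.events:
        return False
    last = agent.events[-1]
    return (
        last.status == "query sent"
        and last.date is not None
        and today - last.date < WAIT
    )


def open_agents(agency: Agency, today: datetime.date) -> List[str]:
    """Names we may query: nobody while any query in the agency is pending."""
    if any(is_pending(a, today) for a in agency.agents.values()):
        return []
    return [
        a.name
        for a in agency.agents.values()
        if not a.events or a.events[-1].status == "open"
    ]


agency = Agency("Example Literary")
agency.agents["Pat"] = Agent("Pat", [Event("query sent", datetime.date(2019, 9, 1))])
agency.agents["Sam"] = Agent("Sam")
today = datetime.date(2019, 9, 15)

before = open_agents(agency, today)
# A rejection is one more event on Pat; it changes what Sam's state means.
agency.agents["Pat"].events.append(Event("query outcome", today, outcome="rejected"))
after = open_agents(agency, today)
print(before, after)
```

The rejection is a single append to one agent's history, yet the answer for the other agent changes. With the whole Agency document loaded, no second lookup is needed to find the siblings.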

In later posts, we'll look at deduplication and some other processing. But this seems to be all the schema we'll ever need. The type hints give mypy some evidence of what we intend to do with these documents.

Tuesday, October 15, 2019

Apple's Numbers and the All-in-One CSV export

Author F. L. Stevens has a hellishly complex (and irregular) spreadsheet with agents, agencies, and query status. (This is how fiction gets marketed: querying agents.) The spreadsheet has become unmanageably complex, with multiple pages. Each page has multiple tables. Buried in this are three "interesting" tables with agent query information.

Can we talk about drama? There is the dark night of the soul for anyone interested in regular, normalized data.

We have some fundamental choices for working with this mess:

1. Export each relevant table to separate files. Lots of manual pointy-clicky and opportunities for making mistakes.

2. Export the whole thing to separate files. Less pointy-clicky.

3. Export the whole thing to one file. About the same pointy-clicky and error vulnerability as #2. But. Simpler still because there's one file to take care of. Something a fiction author should be able to handle.

Each table in the one-file export has a prefix and a suffix. The prefix is a line with "Sheet: Table". Yes, there's a ": " (colon-space) separator. The suffix is a simple blank line, essentially indistinguishable from a blank line within a table.

If the table was originally in strict first normal form (1NF) each row would have the same number of commas. If cells are merged, however, the number of commas can be fewer. This makes it potentially difficult to distinguish blank rows in a table from blank lines between tables.

It's generally easiest to ignore the blank lines entirely. We can distinguish table headers because they're a single cell with a sheet: table format. We are left hoping there aren't any tables that have values that have this format.
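As a small illustration of that header test, here's a sketch that runs against an invented sample. The `SAMPLE` text only mimics the shape described above; a real export will have different sheets, tables, and columns. The `is_table_header()` name is mine.

```python
import csv
import io

# Invented sample in the general shape of the one-file export.
SAMPLE = """\
AgentQuery: agent_query
name,agency
Pat Example,Example Literary

AAR-2019-03: Table 1
agent,url
Sam Sample,https://example.com
"""


def is_table_header(row):
    """A one-cell row containing ': ' marks the start of a new table."""
    return len(row) == 1 and ": " in row[0]


# csv.reader yields an empty list for a blank line, so blanks are easy to drop.
rows = [row for row in csv.reader(io.StringIO(SAMPLE)) if row]
headers = [row[0] for row in rows if is_table_header(row)]
print(headers)
```

Note that the URL cell contains a colon but isn't mistaken for a header: it isn't alone in its row, and it has no ": " with a space.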

We have two ways to walk through the values:

- Preserving the Sheet, Table, Row hierarchy. We can think of this as the for s in sheet: for t in table: for r in rows structure. The sheet iterator is Iterator[Tuple[str, Table_Iterator]]. The Table_Iterator is similar: Iterator[Tuple[str, Row_Iterator]]. The Row_Iterator is the most granular: Iterator[Dict[str, Any]].

- Flattening this into a sequence of "(Sheet name, Table Name, Row)" triples. Since a sheet and table have no other attributes beyond a name, this seems advantageous to me.

The hierarchical form requires a number of generator functions for Sheet-from-CSV, Table-from-CSV, and Row-from-CSV. Each of these works with a single underlying iterator over the source file and a fairly complex hand-off of state. If we only use the sheet iterator, the tables and rows are skipped. If we use the table within a sheet, the first table name comes from the header that started a sheet; the table names come from distinct headers until the sheet name changes.

The table-within-sheet iteration is very tricky. The first table is a simple yield of information gathered by the sheet iterator. Any subsequent tables, however, may be based on one of two conditions: either no rows have been consumed, in which case the table iterator consumes (and ignores) rows; or, all the rows of the table have been consumed and the current row is another "sheet: table" header.

The code sample below involves a fair amount of repetition. It's not appealing to refactor this because it's ungainly in its complexity, and doesn't create any tangible value. (I haven't even tried to get the type hints right.)

```python
import csv
from pathlib import Path


class SheetTable:
    def __init__(self, source_path: Path) -> None:
        self.path: Path = source_path
        self.csv_source = None
        self.rdr = None
        self.header = None
        self.row = None

    def __enter__(self) -> "SheetTable":
        self.csv_source = self.path.open()
        self.rdr = csv.reader(self.csv_source)
        self.header = None
        self.row = next(self.rdr)
        return self

    def __exit__(self, *args) -> None:
        self.csv_source.close()

    def _sheet_header(self) -> bool:
        # A "Sheet: Table" header is a single cell with a ": " separator.
        return len(self.row) == 1 and ': ' in self.row[0]

    def sheet_iter(self):
        while True:
            # Skip ahead to the next header row.
            while not (self._sheet_header()):
                try:
                    self.row = next(self.rdr)
                except StopIteration:
                    return
            self.sheet, _, self.table = self.row[0].partition(": ")
            self.header = next(self.rdr)
            self.row = next(self.rdr)
            yield self.sheet, self.table_iter()

    def table_iter(self):
        # The first table comes from the header the sheet iterator found.
        yield self.table, self.row_iter()
        while not (self._sheet_header()):
            try:
                self.row = next(self.rdr)
            except StopIteration:
                return
        next_sheet, _, next_table = self.row[0].partition(": ")
        # Later tables come from their own headers, until the sheet changes.
        while next_sheet == self.sheet:
            self.table = next_table
            self.header = next(self.rdr)
            self.row = next(self.rdr)
            yield self.table, self.row_iter()
            while not (self._sheet_header()):
                try:
                    self.row = next(self.rdr)
                except StopIteration:
                    return
            next_sheet, _, next_table = self.row[0].partition(": ")

    def row_iter(self):
        while not self._sheet_header():
            yield dict(zip(self.header, self.row))
            try:
                self.row = next(self.rdr)
            except StopIteration:
                return
```

Clearly, this is craziness.

Flattening is much nicer.

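```python
import csv
from pathlib import Path
from typing import Any, Dict, Iterator, Tuple


def sheet_table_iter(source_path: Path) -> Iterator[Tuple[str, str, Dict[str, Any]]]:
    with source_path.open() as csv_source:
        rdr = csv.reader(csv_source)
        header = None
        for row in rdr:
            if len(row) == 0:
                # Blank lines carry no information; ignore them all.
                continue
            elif len(row) == 1 and ": " in row[0]:
                # "Sheet: Table" header; the next line has the column names.
                sheet, table = row[0].split(": ", maxsplit=1)
                header = next(rdr)
                continue
            else:
                # Inject headers to create dict from row
                yield sheet, table, dict(zip(header, row))
```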

This provides a relatively simple way to find the relevant tables and sheets. We can use something as simple as the following to locate the relevant data.

```python
for sheet, table, row in sheet_table_iter(source_path):
    if sheet == 'AgentQuery' and table == 'agent_query':
        agent = agent_query_row(database, row)
    elif sheet == 'AAR-2019-03' and table == 'Table 1':
        agent = aar_2019_row(database, row)
```

This lets us write pleasant functions that handle exactly one row from the source table. We'll have one of these for each target table. In the above example, we've only shown two, but you get the idea. Each new source table, with its unique headers, can be accommodated.
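If the if-elif chain grows, one option is a dispatch table keyed by (sheet, table). This is only a sketch of that alternative: the handler bodies and the column names ("Agent", "Member") are stand-ins I made up, and the database is just a list here.

```python
from typing import Any, Callable, Dict, Iterable, List, Tuple

Row = Dict[str, Any]


def agent_query_row(database: List[Row], row: Row) -> Row:
    """Stand-in handler; the 'Agent' column name is invented."""
    agent = {"name": row["Agent"].strip(), "source": "AgentQuery"}
    database.append(agent)
    return agent


def aar_2019_row(database: List[Row], row: Row) -> Row:
    """Stand-in handler; the 'Member' column name is invented."""
    agent = {"name": row["Member"].strip(), "source": "AAR"}
    database.append(agent)
    return agent


# One entry per interesting source table.
HANDLERS: Dict[Tuple[str, str], Callable[[List[Row], Row], Row]] = {
    ("AgentQuery", "agent_query"): agent_query_row,
    ("AAR-2019-03", "Table 1"): aar_2019_row,
}


def load(triples: Iterable[Tuple[str, str, Row]]) -> List[Row]:
    database: List[Row] = []
    for sheet, table, row in triples:
        handler = HANDLERS.get((sheet, table))
        if handler is not None:  # quietly skip the uninteresting tables
            handler(database, row)
    return database


triples = [
    ("AgentQuery", "agent_query", {"Agent": " Pat Example "}),
    ("Notes", "Table 1", {"anything": "ignored"}),
    ("AAR-2019-03", "Table 1", {"Member": "Sam Sample"}),
]
database = load(triples)
print([agent["name"] for agent in database])
```

Adding a new source table then means writing one row function and adding one line to the mapping.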

Tuesday, October 8, 2019

Spreadsheet Regrets

I can't emphasize this enough.

Some people, when confronted with a problem, think “I know, I'll use a spreadsheet.” Now they have two problems.

(This was originally about regular expressions. And AWK. See http://regex.info/blog/2006-09-15/247)

Fiction writer F. L. Stevens got a list of literary agents from AAR Online. This became a spreadsheet driving queries for representation. After a bunch of rejections, another query against AAR Online provided a second list of agents.

Apple's Numbers product will readily translate the AAR Online HTML table into a usable spreadsheet table. But after initial success the spreadsheet as tool of choice collapses into a pile of rubble. The spreadsheet data model is hopelessly ineffective for the problem domain.

What is the problem domain?

There are two user stories:

- Author needs to deduplicate agents and agencies. It's considered poor form to badger agents with repeated queries for the same title. It's also bad form to query two agents at the same agency. You have to get rejected by one before contacting the other.

- Author needs to track activities at the Agent and Agency level to optimize querying. This mostly involves sending queries and tracking rejections. Ideally, an agent acceptance should lead to notification to other agents that the manuscript is being withdrawn. This is so rare as to not require much automation.

Agents come and go. Periodically, an agent will be closed to queries for some period of time, and then reopen. Their interests vary with the whims of the marketplace they're trying to serve. Traditional fiction publishing is quite complex; agents are the gatekeepers.

To an extent, we can decompose the processing like this.

1. Sourcing. There are several sources: AAR Online and Agent Query are two big sources. These sites have usable query engines and the HTML can be scraped to get a list of currently active agents with a uniform representation. This is elegant Python and Beautiful Soup.

2. Deduplication. Agency and Agent deduplication is central. Query results may involve state changes to an agent (open to queries, interested in new genres.) Query results may involve simple duplicates, which have to be discarded to avoid repeated queries. It's a huge pain when attempted with a spreadsheet. The simplistic string equality test for name matching is defeated by whitespace variations, for example. This is elegant Python, however.

3. Agent web site checks. These have to be done manually. Agency web pages are often art projects, larded up with JavaScript that produces elegant rolling animations of books, authors, agents, background art, and text. These sites aren't really set up to help authors. It's impossible to automate a check to confirm the source query results. This has to be done manually: F. L. is required to click and update status.

4. State Changes. Queries and Rejections are the important state changes. Open and Closed to queries is also part of the state that needs to be tracked. Additionally, there's a multiple agent per agency check that makes this more complex. The state changes are painful to track in a simple spreadsheet-like data structure: a rejection by one agent can free up another agent at the same agency. This multi-row state change is simply horrible to deal with.
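The deduplication step can be sketched in a few lines. This is an assumption-laden illustration, not the eventual implementation: `normalize()` and `deduplicate()` are names I invented, and folding case is an extra choice beyond the whitespace problem mentioned above.

```python
from typing import Dict, Iterable, List, Tuple

Pair = Tuple[str, str]  # (agency name, agent name)


def normalize(name: str) -> str:
    """Collapse runs of whitespace and ignore case, for matching only."""
    return " ".join(name.split()).casefold()


def deduplicate(pairs: Iterable[Pair]) -> List[Pair]:
    """Keep the first (agency, agent) pair seen for each normalized key."""
    seen: Dict[Pair, Pair] = {}
    for agency, agent in pairs:
        key = (normalize(agency), normalize(agent))
        seen.setdefault(key, (agency, agent))
    return list(seen.values())


scraped = [
    ("Example Literary", "Pat Example"),
    ("Example  Literary ", "pat example"),  # whitespace and case variations
    ("Example Literary", "Sam Sample"),
]
unique = deduplicate(scraped)
print(unique)
```

The original spelling is preserved for display; only the lookup key is normalized.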

Bonus confusion! Time-to-Live rules: a query over 60 days old is more-or-less a de facto rejection. This means that periodic scans of the data are required to close a query to one agent in an agency, freeing up subsequent agents in the same agency.
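The periodic scan might look something like the following. It's a sketch under assumptions: pending queries are kept in a plain dict of agent name to send date, and `expire_stale()` is a hypothetical helper name.

```python
import datetime
from typing import Dict, List

TIME_TO_LIVE = datetime.timedelta(days=60)


def expire_stale(pending: Dict[str, datetime.date], today: datetime.date) -> List[str]:
    """Drop queries over 60 days old from pending; return the agent names."""
    stale = sorted(
        name for name, sent in pending.items() if today - sent > TIME_TO_LIVE
    )
    for name in stale:
        del pending[name]  # treated as a de facto rejection
    return stale


pending = {
    "Pat Example": datetime.date(2019, 7, 1),
    "Sam Sample": datetime.date(2019, 9, 20),
}
stale = expire_stale(pending, today=datetime.date(2019, 10, 8))
print(stale, sorted(pending))
```

Each name returned is a query to close, which in turn may free up another agent at the same agency.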

Manuscript Wish Lists (MSWLs) are a source for agents actively searching for manuscripts. This is more-or-less a Twitter query. Using the various aggregating web sites seems slightly easier than using Twitter directly. However, additional Twitter lookups are required to locate agent details, so this is interesting web-scraping.

Of course F. L. Stevens has a legacy spreadsheet with at least four "similar" (but not really identical) tabs filled with agencies, agents, and query status.

I don't have an implementation to share -- yet. I'm working on it slowly.

I think it will be an interesting tutorial in cleaning up semi-structured data.

Tuesday, September 10, 2019

Packt Data Unlocked Promotion

See http://bit.ly/DataUnlockedTwitter

You can't go wrong with these kinds of discounts.

#DataUnlocked

Tuesday, September 3, 2019

Finally Planning the Rewrite of Building Skills in Object-Oriented Design

See Building Skills in Object-Oriented Design for the old content, which has a number of features that hold up well over time.

- A graduated series of exercises to build up large, complete applications is important.

- It covers a lot of skills essential to building real applications -- unit testing, integration, code reuse. I want to expand on this to include more testing strategies, and final documentation.

- It's so popular, I've got enough donations to move forward on a rewrite.

Previously, it was hosted out of my ancient web site as HTML and PDF download. That hasn't aged well.

Also, it was originally Python 2, and that ship sailed years ago.

I'm leaning toward hosting the content on GitHub.

One idea is to have a complex project with the following top-level folders:

- A docs folder that has the HTML as well as PDF (and maybe an ebook format, too.)

- A src folder with seed files for the various packages and modules.

- A tests folder with seed tests.

Someone could fork and then build on the framework.

It's possible to put the exposition into the wiki pages associated with the repo. This has the advantage of keeping the meta-level documentation and individual project requirements separate from the project itself.

Before I go too far, I'll need to experiment a bit to see what the editing process is like. The GitHub wiki pages are their own git branch, and are easy to edit off-line and push to the repo. Some of the fancy Sphinx markup features vanish, replaced with basic RST. This may not be all bad, since the baseline content is not *very* complex.

Stand by for more.

Tuesday, August 13, 2019

Coping with Windows via AWS

For a training class, I needed to address The Windows Problem™. TWP is my summary of all the non-standard features of Windows in its various inconsistent incarnations.

Any training class that involves "install Python" inevitably involves at least one Windows user who can't get their PATH set correctly. It's an eternal mystery to me, since the installers all seem to take care of this, but, some people are able to click the wrong thing somewhere in a simple installation.

Uninstalling and starting again sometimes helps. Having a Windows expert in the room sometimes helps.

(The hapless flailing I sometimes observe is my personal problem to deal with. I should not let myself get short-tempered with people who try things exactly once and then stop, unable to discern a single branch in the path they chose. It's as if the sequence of dialog boxes with different choices never existed for them, and I need to respect the fact that they did not read the messages and did not think of themselves as actively making choices.)

I have to say that being able to get a Windows machine in the free tier of AWS is a wonderful resource.

I can screen capture the installation on Windows.

I can narrate the sequence of choices I made. I'm hoping this prevents TWP from side-tracking a person who's struggling with Windows.

I haven't used the movies yet. But it's so handy to be able to spin up a Windows machine in the cloud and run the Conda install. It's a whole lot nicer than buying a throw-away Windows machine to do screen shots on.

Friday, July 5, 2019

Mastering Object-Oriented Python 2nd ed

The book https://www.packtpub.com/programming/mastering-object-oriented-python-second-edition

Some new chapters.

Type hints almost everywhere.

If you want to write a review, DM me on Twitter @s_lott. I can add you to the list for freebies in exchange for a review.

Thursday, June 20, 2019

HumbleBundle -- Functional Python Programming -- Through July 1

See this https://www.humblebundle.com

This is amazing to me.

Humble Bundle sells games, ebooks, software, and other digital content. Our mission is to support charity while providing awesome content to customers at great prices. We launched in 2010 with a single two-week Humble Indie Bundle, but we have humbly grown into a store full of games and bundles, a subscription service, a game publisher, and more.

Currently, Packt is offering some of my books.

Want a *ton* of technical books and donate to charity?

Click Now. Thank me later.

Tuesday, June 18, 2019

The Pythonista app for iPad

Let me start my review with "wow!"

Python 3.6 on the iPad. Works. Nicely. Easy to use. Reliable. Rock-Solid.

I'm not switching to iPad as my primary platform any time in the near future. But. For certain kinds of small and tightly focused hackery, this is really nice.

I use a bracket to hold the iPad up and an external keyboard. It can be used with the on-screen keyboard, but that's slow-going for me.

Here's the thing that was exquisitely simple in Pythonista:

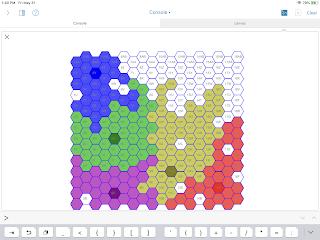

I'm able to draw a hex grid ("Flat Top", "Double Height") in a few dozen lines of code. This includes a bunch of geometry rules like adjacency and directional movement.

The Pythonista package includes a super-easy-to-use canvas module that's a tiny bit simpler than turtle graphics. It takes a bit of getting used to, but it has enough graphics primitives to make it easy to create hexagons and tile the surface.

Given a HexGrid instance, I can then create "cities" and their surrounding territories in an "empire". I've tried a few organic growth algorithms, and I like the look of these maps. They provide a lot of avenues for conflict for writing fiction or playing role-playing games.

Some of the algorithmic foundations: https://www.redblobgames.com/grids/hexagons/.

Fun Hackery

This is fun hackery because I can change the code, click the run icon, and watch the consequence of the changes. A traceback is highlighted in the original file. Easy. Fun.

It's pretty slow. No surprise there. It's running on an iPad.

It's pretty easy to work on. Whip out the iPad and start coding.

The super-easy, built-in canvas module means feedback is instant and gratifying.

I can see having an intro to programming class where the fee includes an extra $800 for the iPad you take home along with your new-found skills in basic coding. (This is still a *lot* of money, but it's less than a full laptop.)

Filling in the Holes

Looking at the output, you can see the growth algorithm left some unfilled holes. A later version examines all unfilled spaces to see if they're entirely surrounded by one color and fills them. This is a fun algorithm because it works in a simple way with the adjacency iterator and the set of locations covered by a city. Locations 12L and 17K are these "Simply Surrounded Single Holes."

However, there are still some "Edge" cases that are challenging.

Location 12D reflects a hole on a border. These are interesting, and could be the seed for epic wargaming, role-playing game, novel-writing drama. A simple algorithm can find these and assign a random owner... But... They really need a special "On Fire" color scheme to show the potential for drama.

There's a subtlety in the upper-left corner (5W and 6V) between Blue and Green. While these seem like simple border holes, each hole has only five of six required neighbors.

Compare these with 16P in the upper-right corner. This also has five of six neighbors. However, this space looks like it could be a bay leading up to a river and the river is a natural border between nations.

The head of the bay at 16P has 5 neighbors of two colors, similar to 5W and 6V. The difference can be detected by a recursive walk to see if a hole is connected to other holes and the composite is actually surrounded. There are lots of *edge* cases, but the (5W, 6V) pair seems to embody the next stage of surround detection.
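Both checks can be sketched in a few lines. This is a rough illustration, not the code behind these maps. It assumes axial (q, r) coordinates, a set of claimed locations, and a set holding every location on the grid. The names neighbors(), simply_surrounded(), and hole_region() are hypothetical. The walk uses an explicit stack instead of recursion, and it treats "stepped off the edge of the grid" as the sign of an open bay. The docstrings carry small doctest examples.

```python
from typing import Set, Tuple

Location = Tuple[int, int]  # hypothetical axial (q, r) coordinates

DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def neighbors(loc: Location) -> Set[Location]:
    """The six locations adjacent to loc."""
    q, r = loc
    return {(q + dq, r + dr) for dq, dr in DIRECTIONS}


def simply_surrounded(hole: Location, city: Set[Location]) -> bool:
    """True when all six neighbors of the hole belong to one city.

    >>> city = neighbors((0, 0))
    >>> simply_surrounded((0, 0), city)
    True
    >>> simply_surrounded((0, 0), city - {(1, 0)})
    False
    """
    return neighbors(hole) <= city


def hole_region(start: Location, claimed: Set[Location], grid: Set[Location]):
    """Walk outward from an unclaimed location.

    Returns the connected set of unclaimed locations, plus a flag that
    is True only if the walk never stepped off the edge of the grid.

    >>> grid = {(q, r) for q in range(-2, 3) for r in range(-2, 3)}
    >>> region, enclosed = hole_region((0, 0), grid - {(0, 0), (1, 0)}, grid)
    >>> sorted(region), enclosed
    ([(0, 0), (1, 0)], True)
    """
    region: Set[Location] = set()
    enclosed = True
    pending = [start]
    while pending:
        loc = pending.pop()
        if loc in region:
            continue
        region.add(loc)
        for n in neighbors(loc):
            if n not in grid:
                enclosed = False  # open to the outside, like a bay
            elif n not in claimed and n not in region:
                pending.append(n)
    return region, enclosed


if __name__ == "__main__":
    import doctest
    doctest.testmod()
```

A pair of adjacent holes comes back as one enclosed region of size two. A bay comes back with the flag set to False, because the walk reaches the map edge.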

This more nuanced algorithm design doesn't work out well in the Pythonista environment. This algorithm design requires careful unit tests, not the code-and-run cycle of hackery. For this kind of careful design, we'd need to leverage doctest (or unittest) for testing. While I'd like pytest, that's a lot to ask for. For these kinds of apps, doctest is more than adequate, and a simple import doctest; doctest.testmod() in a script can help be sure things work as expected.

tl;dr

If you're an iPad user, consider adding Pythonista. You can really write real Python. It's a useful environment. It's fun for teaching.

Tuesday, June 11, 2019

Circuit Python on the Gemma M0 -- The Red Ranger Beacon

PyCon 2018 Swag included a Gemma M0. (https://www.adafruit.com/product/3501)

PyCon 2019 Swag included a Circuit Playground Express. See https://learn.adafruit.com/adafruit-circuit-playground-express/circuitpython-quickstart.

Both of these are (to me) amazing. They mount as USB devices; there’s a code.py file that’s automatically run when the board restarts.

The Gemma has fairly few pins and does some really simple things. The CPX has a bunch of pins and a ton of hardware on the board. Buttons, switches, LEDs, motion sensor, temperature, brightness... I’ve lost count.

Step 1 -- Get Organized

Create a proper project directory on your local machine. Yes, you can hack the code.py file immediately, but you should consider making a backup before you start making changes.

Few things are more frustrating than making a mistake and being unable to restore the original functionality as a check to be sure things are still working.

Also. At some point, you'll want to upgrade the OS on the chip. This will require you to have a bootable image. The process isn't complex, but it does require some care. See https://learn.adafruit.com/welcome-to-circuitpython/installing-circuitpython#download-the-latest-version-3-4 for downloading a new OS.

So. Step 1a. Create a local folder for your projects. Within that folder, create a folder for each project. Put the relevant code.py into the sub-folder. Like this

gemma
┣━━ baseline
┃   ┗━━ code.py
┣━━ my-first-project
┃   ┗━━ code.py
┣━━ another-project
┃   ┗━━ code.py
┗━━ os-upgrade
    ┗━━ other files...
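The save-a-baseline and copy-to-the-board steps can be scripted. This is a small sketch, not part of the original workflow: the function names save_baseline() and install() are made up, and the board path depends on your machine (on macOS a CircuitPython board typically mounts as /Volumes/CIRCUITPY, but check yours).

```python
import shutil
from pathlib import Path


def save_baseline(board: Path, baseline: Path) -> Path:
    """Copy the board's current code.py into the baseline folder.

    An existing baseline is left alone, so the original is never lost.
    """
    baseline.mkdir(parents=True, exist_ok=True)
    target = baseline / "code.py"
    if not target.exists():
        shutil.copy2(board / "code.py", target)
    return target


def install(project: Path, board: Path) -> Path:
    """Copy a project's code.py onto the mounted board."""
    target = board / "code.py"
    shutil.copy2(project / "code.py", target)
    return target


# Example use, with assumed paths:
#   board = Path("/Volumes/CIRCUITPY")
#   save_baseline(board, Path("gemma/baseline"))
#   install(Path("gemma/my-first-project"), board)
```

Restoring the original behavior is then just install() pointed at the baseline folder.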

See https://learn.adafruit.com/adafruit-gemma-m0/troubleshooting for additional help if you have a Windows PC.

Step 2 -- Start Small

Tweak a few things in the supplied code.py if you're new to IoT stuff.

A lot of folks like the Mu editor for this. https://codewith.mu.

I like using BBEdit. https://www.barebones.com/products/bbedit/. To make this work I *also* need to have

- a terminal window open so I can use the Mac OS screen application, and

- a finder window open to copy the code.py from my PC to the Gemma M0.

This is a lot of busy screen real-estate with three separate apps open. It's not for everyone. I like it because there's little hand-holding. You may prefer Mu.

It's important to go through the edit/download/play cycle many times to be sure you're clear on what code's on your PC and what code's on your board.

It's even more important to see how you're forced to debug syntax errors using the screen app until you invent a suitable mock library for off-line unit testing.
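One way to start on a mock library is to register stand-ins for the CircuitPython-only modules before importing the device code. This is a minimal sketch using unittest.mock from desktop Python. The setup_led() function is a made-up example of the kind of code that lives in code.py, and board.D13 is assumed to be the pin for the Gemma M0's red LED.

```python
import sys
from unittest import mock

# Register fakes *before* the device code is imported, so that
# "import board" and "import digitalio" succeed on a desktop Python.
sys.modules["board"] = mock.MagicMock(name="board")
sys.modules["digitalio"] = mock.MagicMock(name="digitalio")

import board      # resolves to the fake registered above
import digitalio  # resolves to the fake registered above


def setup_led():
    """Hypothetical device code: configure the LED pin and turn it on."""
    led = digitalio.DigitalInOut(board.D13)
    led.direction = digitalio.Direction.OUTPUT
    led.value = True
    return led


def test_setup_led():
    """Runs off-line; no board required."""
    led = setup_led()
    digitalio.DigitalInOut.assert_called_with(board.D13)
    assert led.direction is digitalio.Direction.OUTPUT
    assert led.value is True


if __name__ == "__main__":
    test_setup_led()
    print("ok")
```

This catches syntax errors and wrong pin names at the desk. It says nothing about timing or real hardware behavior, so the edit/download/play cycle is still needed.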

Step 3 -- Plan Carefully

The version of Python is remarkably complete.

However.

It's also a very small processor, with very few pins, so you can't do anything super elaborate. You can, however, do quite a bit.

See Nina Zakharenko - Keynote - PyCon 2019 for some inspiration

Step 4 -- Check This Out

https://github.com/slott56/gemma-boat-beacon

Many thanks to @nnja for showing us some elegant, inspirational ideas.

Tuesday, June 4, 2019

Probabilistic Data Structures

Interesting data structures with O(n) performance. This can help to unscramble O(n²) problems allowing progress.

https://pdsa.readthedocs.io/en/latest/index.html
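For a taste of the idea, here's a toy Bloom filter, one of the better-known probabilistic structures. It's written from scratch with hashlib as an illustration and does not use the pdsa API. The class name and the default sizes are arbitrary choices. It answers "definitely not seen" or "probably seen" in a fixed amount of memory.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Toy Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size_bits: int = 1024, hash_count: int = 3) -> None:
        self.size_bits = size_bits
        self.hash_count = hash_count
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item: str) -> Iterator[int]:
        # Derive several bit positions by salting one hash function.
        for seed in range(self.hash_count):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: str) -> bool:
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )
```

Each add and each membership test touches only hash_count bits, no matter how many items have been added. That's where the help with otherwise quadratic comparisons comes from.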

Tuesday, May 28, 2019

Rules for Debugging

Here's the situation.

Someone wrote code. It didn't do what they assumed it would do.

They come to me for help.

Here are my rules for debugging. All of them.

1. Try something else.

I don't have any other or more clever advice. When I look at someone's broken code, I'm going to suggest the only thing I know. Try something else.

I can't magically make broken code work. Code's not like that. If it doesn't work, it's wrong, usually in a foundational way. Usually, the author made an assumption, followed through on that assumption, and was astonished it didn't work.

A consequence of this will be massive changes to the broken code. Foundational changes.

When you make an assumption, you make an "ass" out of "u" and "mption".

Debugging is a matter of finding and discarding assumptions. This can be hard. Some people think their assumptions are solid gold and write long angry blog posts about how a language or platform or library is "broken."

The rest of us try something different.

My personal technique is to cite evidence for everything I think I'm observing. Sometimes, I actually write it down -- on paper -- next to the computer. (Sometimes I use the Mac OS Notes app.) Which lines of code. Which library links. Sometimes, I'll include these in the code as references to documentation pages.

Evidence is required to destroy assumptions. Destroying assumptions is the essence of debugging.

Sources of Assumptions

I'm often amazed at how many people don't read the "But on Windows..." parts of the Python documentation. Somehow, they're willing to assume -- without evidence -- that Windows is POSIX-compliant and behaves like Linux. When things don't follow their assumed behavior, and they're using Windows, it often seems like they've compounded a bunch of personal assumptions. I don't have too much patience at this point: the debugging is going to be hard.

I'm often amazed that someone can try to use multiprocessing.apply_async() without reading any of the example code. What I'm guessing is that assumptions trump research, making them solid gold, and not subject to questioning or locating evidence. In the case of multiprocessing, it's important to look at code which appears broken and compare it, line-by-line with example code that works.

Comparing broken code with relevant examples is -- in effect -- trying something else. The original didn't work. So... Go to something that does work and compare the two to identify the differences.
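For a baseline to compare against, here's a small working example in the style of the standard library documentation. Note that apply_async() is a method of multiprocessing.Pool. The square() and run_jobs() names are made up for this sketch.

```python
import multiprocessing


def square(n: int) -> int:
    """Worker function; defined at module level so it can be pickled."""
    return n * n


def run_jobs(values):
    """Submit one task per value, then collect the results in order."""
    with multiprocessing.Pool(processes=2) as pool:
        pending = [pool.apply_async(square, (v,)) for v in values]
        # get() must happen while the pool is still alive.
        return [p.get(timeout=30) for p in pending]


if __name__ == "__main__":
    # The guard matters on Windows (one of those "But on Windows..." notes).
    print(run_jobs([1, 2, 3]))
```

Three details commonly differ in broken versions: the worker isn't a module-level function, get() is called after the pool has been closed, or the __main__ guard is missing.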

Tuesday, May 21, 2019

Tuesday, May 14, 2019

PyCon 2019

There are some things I could say.

But.

You can come to understand it yourself, also.

Go here: https://www.youtube.com/channel/UCxs2IIVXaEHHA4BtTiWZ2mQ

Start with the keynotes. https://www.youtube.com/channel/UCxs2IIVXaEHHA4BtTiWZ2mQ/search?query=keynote

For me, one of the top presentations was this https://www.youtube.com/watch?v=9G2s1TN9QQY

There are several closely related, but I found this very helpful.
